Influential Statistician Dr. Hadley Wickham Visits DC and Presents on R Code Organization

September 17, 2015 • David Kretch

Last night, Summiteers joined Statistical Programming DC for Dr. Hadley Wickham's talk on creating fluent interfaces for R. As the creator of many popular and influential R packages, including ggplot2, plyr, reshape2, dplyr, and tidyr, Dr. Wickham is an authority on developing for R in a readable, reusable, and practical fashion.

(If you haven’t heard of him, check out this profile: “Hadley Wickham, the Man Who Revolutionized R.”)

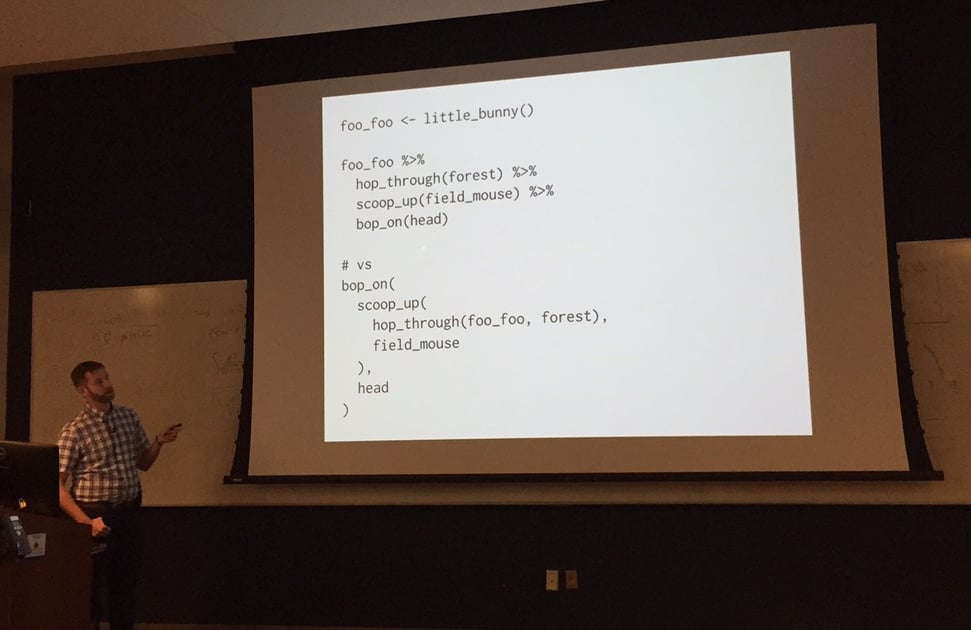

The focus of Dr. Wickham's presentation was creating readable, reproducible data analysis programs using a technique called piping. The pipe operator, written in R as ‘%>%’, moves data from one function to the next, as the name implies: the value on its left becomes the first argument of the function on its right.

For example,

    foo_foo %>%
      hop_through(forest) %>%
      scoop_up(field_mouse) %>%
      bop_on(head)

is equivalent to writing

    bop_on(scoop_up(hop_through(foo_foo, forest), field_mouse), head)

But the version using piping is much easier to read. Using piping, we can construct complex and readable data analysis programs out of simpler building block functions; piping frees us from having to figure out how to join our blocks together so we can focus on the blocks themselves.
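To make the equivalence concrete, here is a minimal runnable sketch using base R functions in place of the illustrative ones above. It assumes the magrittr package, which provides ‘%>%’, is installed; the input vector is made up for the example.

```r
library(magrittr)  # provides the %>% operator

x <- c(1, 4, 9, 16)

# Nested form: has to be read from the inside out
nested <- round(mean(sqrt(x)), 1)

# Piped form: reads top to bottom, one step per line
piped <- x %>%
  sqrt() %>%
  mean() %>%
  round(1)

# Both forms compute the same value
identical(nested, piped)
```

Each step in the piped version receives the previous result as its first argument, so `round(1)` here means `round(<previous result>, 1)`.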

To take advantage of pipes, our functions should have three qualities: purity, predictability, and pipeability.

Purity

Functions should be pure, which is computer science jargon meaning their output depends only on their input and their operation does not change the state of the world. Pure functions are easier to reason about since they can be considered in isolation. For example, the value of sum(1, 2) depends only on the 1 and 2 provided to the sum function; you don’t need to know anything else.

Examples of impure functions are those that read from or write to disk, set options, or generate random numbers. You can’t entirely avoid these, but operations that require impure functions make up only a limited portion of typical data analysis tasks.
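As a rough illustration of the difference, the sketch below contrasts a pure function with an impure one that reads a global option. The function names and the `tax_rate` option are hypothetical, invented only for this example.

```r
# Pure: the result depends only on the arguments passed in
add_tax <- function(price, rate) {
  price * (1 + rate)
}

# Impure: the result also depends on a global option (hypothetical name)
# that other code can change at any time
add_tax_impure <- function(price) {
  price * (1 + getOption("tax_rate", default = 0))
}

# The pure version gives the same answer for the same inputs, every time
pure_result <- add_tax(100, 0.25)

# The impure version gives different answers for the same input
options(tax_rate = 0.25)
impure_before <- add_tax_impure(100)
options(tax_rate = 0.5)
impure_after <- add_tax_impure(100)

# Clean up the option so the example leaves no state behind
options(tax_rate = NULL)
```

To understand `add_tax_impure(100)` you need to know what every earlier call to `options()` did; `add_tax(100, 0.25)` can be read on its own.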

Predictability

Related functions should be consistent with each other: consistent names, argument orders, output object types, etc. Predictability means we only have to learn one convention to apply it in many places. It makes syntax easier to learn, easier to teach, and easier to read.

Dr. Wickham notes that it’s not always possible to be consistent on all axes. For example, we might have three functions: one takes a dataset and a filename as arguments, one takes only a filename, and one takes only a dataset. We can't consistently make filenames or datasets first in our argument order! Instead, we can be consistent in our priorities and in how our ordering principles take precedence over one another.
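One way such a priority rule might look is sketched below. The rule chosen here (the dataset goes first when there is one, otherwise the file path goes first) and the three function names are assumptions made for illustration, not a convention stated in the talk.

```r
# Hypothetical rule: the dataset comes first whenever a function takes one;
# otherwise the file path comes first.

# Takes a dataset and a path: dataset first
save_table <- function(data, path) {
  write.csv(data, file = path, row.names = FALSE)
  invisible(data)  # return the data invisibly so the call can be chained
}

# Takes only a path
load_table <- function(path) {
  read.csv(path)
}

# Takes only a dataset
count_rows <- function(data) {
  nrow(data)
}

path <- tempfile(fileext = ".csv")
df <- data.frame(id = 1:3, score = c(10, 20, 30))

save_table(df, path)
n_rows <- count_rows(load_table(path))
```

No single argument can lead everywhere, but a reader who knows the rule can still predict each signature.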

Pipeability

Functions should accept the object being transformed as their first argument and return an object of the same type. This allows us to chain together our functions with pipes and leverage all other code written to be used with pipes.
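A small sketch of what this can look like in practice follows. Both helper functions are hypothetical; each takes a data frame as its first argument and returns a data frame, which is what lets them be chained. It again assumes the magrittr package is installed.

```r
library(magrittr)  # provides the %>% operator

# Hypothetical helper: data frame in, data frame out
keep_positive <- function(data, column) {
  data[data[[column]] > 0, , drop = FALSE]
}

# Hypothetical helper: adds a doubled copy of a column, returns the data frame
add_double <- function(data, column) {
  data[[paste0(column, "_x2")]] <- data[[column]] * 2
  data
}

df <- data.frame(value = c(-1, 2, 3))

# Because input and output types match, the steps compose in any order
result <- df %>%
  keep_positive("value") %>%
  add_double("value")
```

If `keep_positive` returned a bare vector instead of a data frame, the next step in the chain would fail, which is why returning the same type matters as much as the argument order.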

By making our functions conform to these guidelines, our code is easier to write and easier to understand.

Thank you to Dr. Wickham for visiting D.C. and sharing such a useful presentation! If you’d like to learn more about his work, check out his website, follow him on Twitter, or browse his popular GitHub.
