Assessing Evaluability: Selecting the Right Type of Program Evaluation

October 26, 2015 • Ed Dieterle

In the first post of our blog series on program evaluation, Summit’s Program Evaluation Team explored the importance of program evaluation in relation to Federal Departments and Federal Budgets. In our second post, we provided an overview of logic models, which depict how a program or policy solves an identified problem under specified conditions. In the current post, we discuss how to select the right type of evaluation given a program or policy’s maturity.

Nothing good comes from selecting an evaluation method and then force-fitting a program or policy to it. It would be like walking into a clothing store with your eyes closed and picking up a random item to buy. What ends up in your hands may or may not fit, be your style, or be in your price range. Here at Summit, we advocate for evaluation designs that are driven by:

- Program/policy maturity

- Intended use of the results

- Known costs, benefits, and risks

After developing a program logic model, evaluators and stakeholders (i.e., individuals or organizations who have a stake in a program or policy) complete an evaluability assessment (EA) to determine: (1) how the results will be used, (2) what the known costs, benefits, and risks are, (3) what the most appropriate evaluation method or methods are, and (4) what data are available and how the data will be managed.

How will the results be used?

Results generated from an evaluation help stakeholders make wiser decisions. Before delving into evaluation questions, methods, or costs, it is important for evaluators and stakeholders to agree on the purpose of the evaluation and how the results will be used. In the 2014 Economic Report of the President, for example, the Council of Economic Advisers states that results from evaluations would inform Federal budgets in order to (a) improve Federal programs; (b) scale up the approaches that work best; and (c) discontinue those that are less effective. Program evaluations are not a luxury; a reliable evaluation can mean the difference between continued project funding and a cancelled project.

What are the costs, benefits, and risks?

Before an evaluation effort begins, evaluators and stakeholders should consider the costs, benefits, and risks associated with the evaluation. The benefits, which should align closely with how the results will be used, should outweigh the resources (i.e., time, money, and burden) required to complete the evaluation. All research and evaluation that involves human participants involves risk.[1] A trained evaluator can explain common risks and how to mitigate them, including the protocols that protect participant privacy and data security.

What is the most appropriate evaluation method?

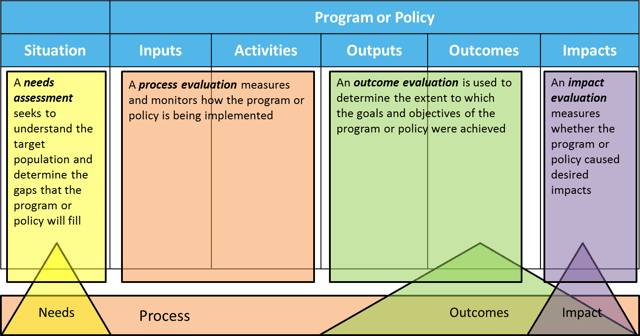

As Light, Singer, and Willett (1990) caution, “You can’t fix by analysis what you bungled by design.”[2] A successful evaluation design aligns purpose and method while considering costs, benefits, and risks. As shown in Figure 1, there is a clear progression of methodological choice, from determining the target population and understanding the gaps that a program or policy will fill to measuring impact, which involves calculating whether a program or policy caused desired impacts. Working with a trained evaluator, stakeholders can determine which method (or combination of methods) is most appropriate to the program or policy, including questions that can be answered in the near term and over time.

Figure 1. Generic program logic model by evaluation type with descriptions of each evaluation method.

What data are available and how will the data be managed?

A trained evaluator can explain data preparation, blending (i.e., stitching together two or more data sets), and storage. Data are the lifeblood of an evaluation study. Without relevant, high-quality data, the value of a program evaluation is questionable, even with an otherwise rigorous design and a large sample size. Working with stakeholders, evaluators can determine the quality of the data available for analysis and set expectations.
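To make blending concrete, here is a minimal sketch in Python of stitching two data sets together on a shared identifier. Everything in it is hypothetical and chosen only for illustration: the `blend` function, the `participant_id` key, and the sample records are assumptions, not a description of Summit's tooling. It keeps every enrollment record and reports which ones have no matching outcome record, a common first check on data quality.

```python
def blend(enrollment, outcomes, key="participant_id"):
    """Attach outcome fields to enrollment records that share the same key.

    Returns the blended records plus the keys of enrollment records
    that had no matching outcome record.
    """
    # Index the outcome records by key for quick lookup.
    outcomes_by_key = {row[key]: row for row in outcomes}

    blended, unmatched = [], []
    for row in enrollment:
        match = outcomes_by_key.get(row[key])
        if match is None:
            # Keep the enrollment record, but flag the gap.
            unmatched.append(row[key])
            blended.append(dict(row))
        else:
            blended.append({**row, **match})
    return blended, unmatched


# Hypothetical sample data, for illustration only.
enrollment = [
    {"participant_id": "P001", "site": "A"},
    {"participant_id": "P002", "site": "B"},
    {"participant_id": "P003", "site": "A"},
]
outcomes = [
    {"participant_id": "P001", "completed": True},
    {"participant_id": "P003", "completed": False},
]

blended, unmatched = blend(enrollment, outcomes)
print(blended)
print(unmatched)
```

A list of unmatched identifiers like this one gives evaluators and stakeholders something specific to discuss when setting expectations about data quality.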

Summit is experienced in using commercial cloud computing services to handle big computing and data storage challenges. These services give us access to functionally unlimited data storage and analytical capacity. Cloud computing provides the ability to dynamically and efficiently scale infrastructure based on each study’s needs.[3]

Next week, we will discuss how to use administrative data in program evaluation. Administrative data are data not collected for research purposes, but for recordkeeping: tracking participants, registrants, employers, or transactions.

Special thanks to Natalie Patten and Balint Peto for their assistance in writing this blog post.

References

Light, Richard J., Judith D. Singer, and John B. Willett. By Design: Planning Research on Higher Education. Cambridge, MA: Harvard University Press, 1990.

Mell, Peter, and Timothy Grance. “The NIST Definition of Cloud Computing: Recommendations of the National Institute of Standards and Technology.” Gaithersburg, MD: U.S. Department of Commerce, National Institute of Standards and Technology, 2011.

National Research Council. Proposed Revisions to the Common Rule for the Protection of Human Subjects in the Behavioral and Social Sciences. Washington, DC: National Academies Press, 2014.

[1] For more on the Common Rule, the baseline standard of ethics to which government-funded research is held, see National Research Council, Proposed Revisions to the Common Rule for the Protection of Human Subjects in the Behavioral and Social Sciences (Washington, DC: National Academies Press, 2014). http://www.nap.edu/catalog.php?record_id=18614.

[2] Richard J. Light, Judith D. Singer, and John B. Willett, By Design: Planning Research on Higher Education (Cambridge, MA: Harvard University Press, 1990). http://www.hup.harvard.edu/catalog.php?isbn=9780674089310.

[3] For more on cloud computing, see Peter Mell and Timothy Grance, “The NIST Definition of Cloud Computing: Recommendations of the National Institute of Standards and Technology” (Gaithersburg, MD: U.S. Department of Commerce, National Institute of Standards and Technology, 2011). http://faculty.winthrop.edu/domanm/csci411/Handouts/NIST.pdf.
