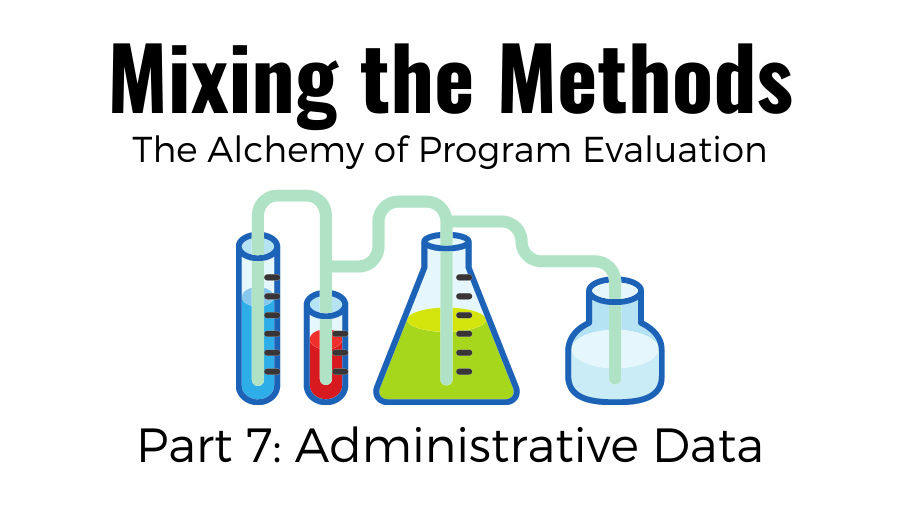

The Alchemy of Program Evaluation, Part 7: Administrative Data

May 17, 2021 • Natalie Patten

Welcome back to our blog series on program evaluation. If you haven’t already, check out last week’s post on logic and theory of change models. In this installment, we will look at using administrative data in program evaluation.

Administrative data are collected for an organization’s operational purposes. They can include personnel, service delivery, demographic, or financial data and are typically not collected for research or evaluation purposes. In the last few decades, however, federal and state agencies have increasingly used administrative data to conduct program evaluations and research. The data provide a readily available, cost-effective source of information that enhances an organization’s evidence-based decision-making.

Still, administrative data are not without their drawbacks. Researchers must perform extensive data-quality reviews and clean the data before analysis. The data often have serious data-quality issues, including duplicate cases, invalid values, incorrect formatting, internal inconsistencies, outlier values, and missing information. Absent quality reviews and data cleaning, these issues can make the data difficult to use for analysis and, even more important, can compromise the reliability of analysis results. Any data-quality issues that cannot be resolved through data editing, imputation (replacing missing data with substituted values), or deletion should be appropriately caveated in the analysis report and explained in the data documentation.
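A data-quality review like the one described above can start with a few simple counts. The sketch below is illustrative only: the records, the field names (`case_id`, `region`, `wage`), and the list of valid regions are hypothetical, and a real review would check many more rules.

```python
from collections import Counter

# Hypothetical administrative records; fields and values are assumptions.
records = [
    {"case_id": "A1", "region": "East", "wage": 15.25},
    {"case_id": "A2", "region": "West", "wage": None},    # missing value
    {"case_id": "A2", "region": "West", "wage": None},    # duplicate case
    {"case_id": "A3", "region": "Nrth", "wage": 14.00},   # invalid category
    {"case_id": "A4", "region": "South", "wage": -3.50},  # invalid value
]

# Assumed set of valid region codes for this example.
VALID_REGIONS = {"North", "South", "East", "West"}


def quality_report(rows):
    """Count a few common data-quality issues in a list of records."""
    id_counts = Counter(r["case_id"] for r in rows)
    return {
        # Case IDs that appear more than once.
        "duplicate_ids": sorted(k for k, n in id_counts.items() if n > 1),
        # Rows with no wage recorded.
        "missing_wage": sum(1 for r in rows if r["wage"] is None),
        # Rows whose region is not in the valid list.
        "invalid_region": sum(1 for r in rows if r["region"] not in VALID_REGIONS),
        # Rows with a recorded wage below zero.
        "negative_wage": sum(
            1 for r in rows if r["wage"] is not None and r["wage"] < 0
        ),
    }


report = quality_report(records)
print(report)
```

Counts like these tell you where to focus editing, imputation, or deletion, and they give you the figures to cite when you caveat unresolved issues in the report.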

Administrative data are often an important but insufficient source of information for analysis and evaluation, so researchers must supplement them with external data sources. For example, administrative data may tell you which firms have the most minimum-wage violations, but you may need external data to capture the firm characteristics that correlate with high violation rates. External sources could include publicly available data, such as demographic data from the U.S. Census or the American Community Survey; financial data from firms like Experian or Dun & Bradstreet; or industry data.
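As a rough sketch of what supplementing administrative data can look like, the example below attaches external firm characteristics to violation counts using a shared firm identifier. The identifiers, fields, and values are all hypothetical, and real linkages often require fuzzy matching on names or addresses rather than a clean key.

```python
# Hypothetical administrative data: minimum-wage violations per firm.
violations = {"F001": 12, "F002": 3, "F003": 7}

# Hypothetical external data keyed on the same (assumed) firm identifier.
firm_traits = {
    "F001": {"industry": "restaurants", "employees": 40},
    "F003": {"industry": "retail", "employees": 120},
}


def enrich(violation_counts, traits):
    """Left-join external firm traits onto administrative violation counts."""
    merged = []
    for firm_id, count in violation_counts.items():
        extra = traits.get(firm_id)
        row = {
            "firm_id": firm_id,
            "violations": count,
            "matched": extra is not None,  # flag firms with no external match
        }
        # Keep unmatched firms, with empty traits, rather than dropping them.
        row.update(extra or {"industry": None, "employees": None})
        merged.append(row)
    return merged


merged = enrich(violations, firm_traits)
unmatched = [row["firm_id"] for row in merged if not row["matched"]]
print(merged)
print("Unmatched firms:", unmatched)
```

Tracking which firms fail to match matters because a low match rate is itself a data-quality issue worth documenting.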

Administrative data are generally observational rather than generated through an experimental design. In other words, administrative data rarely include the random assignment that a randomized controlled trial relies on to determine the causal impact of a treatment. In some research projects, we use administrative data only to produce descriptive statistics or data visualizations because surveys, focus groups, and semi-structured interviews supply the information that helps us answer other research questions. For example:

- In a succession planning study, researchers might use personnel data to determine the number and percentage of employees who are eligible for retirement in the next 5 years.

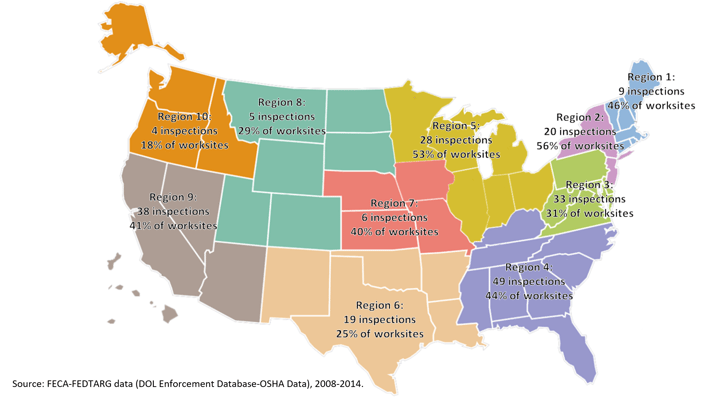

- In an evaluation of a work-site safety inspection program, researchers might use violations data to inform them of the number of inspections by region and provide a visualization like the one below.
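Both descriptive examples above reduce to simple counts and percentages. The sketch below assumes a hypothetical eligibility rule (age 62), a hypothetical reference year, and made-up personnel and inspection records; actual retirement rules usually depend on years of service as well as age.

```python
from collections import Counter

REFERENCE_YEAR = 2021  # assumed "as of" year for the analysis
RETIREMENT_AGE = 62    # hypothetical eligibility rule
HORIZON_YEARS = 5      # look-ahead window from the succession study example

# Hypothetical personnel records.
employees = [
    {"id": 1, "birth_year": 1960},
    {"id": 2, "birth_year": 1975},
    {"id": 3, "birth_year": 1964},
    {"id": 4, "birth_year": 1988},
]


def eligible_within_horizon(employee):
    """True if the employee reaches retirement age by the end of the horizon."""
    age_at_horizon = (REFERENCE_YEAR + HORIZON_YEARS) - employee["birth_year"]
    return age_at_horizon >= RETIREMENT_AGE


eligible_count = sum(1 for e in employees if eligible_within_horizon(e))
eligible_pct = 100 * eligible_count / len(employees)
print(f"Eligible within {HORIZON_YEARS} years: {eligible_count} ({eligible_pct:.1f}%)")

# Hypothetical inspection records, one region label per inspection.
inspections = ["East", "West", "East", "South", "East", "West"]
inspections_by_region = Counter(inspections)
print(inspections_by_region.most_common())
```

The region counts are the kind of table you would pass to a charting tool to produce a bar chart or map.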

Researchers can also use administrative data for statistical testing and regression analysis. In an investigation of whether a lending institution engages in discriminatory lending practices, researchers may use the institution's loan application and origination data to determine whether outcomes differ to a statistically significant degree between minority and nonminority borrowers.
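One simple way to test for a difference in outcomes is a two-proportion z-test on approval rates. The sketch below uses made-up approval counts and is not a description of any particular fair lending methodology; in practice, analysts typically use regression models that control for credit characteristics before drawing conclusions.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two proportions."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled proportion under the null hypothesis of equal rates.
    pooled = (success_a + success_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: approved applications out of total applications.
z, p_value = two_proportion_z_test(
    success_a=300, n_a=500,  # minority applicants: 60% approved
    success_b=420, n_b=600,  # nonminority applicants: 70% approved
)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value indicates that the gap in raw approval rates is unlikely to be due to chance alone, but it does not by itself show why the gap exists.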

If the goal of a program evaluation is to measure causal impacts, administrative data can still be useful when paired with quasi-experimental methods, such as regression discontinuity design or propensity score modeling. We will discuss these and other outcome and impact analyses in the next blog post.

Administrative data can be a rich and cost-effective source of information for program evaluations and other research. Despite their challenges, they can support baseline analyses, statistical testing, and even causal impact estimates that help drive evidence-based decision-making.

Join us next week, when we’ll talk about impact evaluations.
