Advantages of Tree-Based Modeling

August 21, 2018 • Anoushka Patel

Tree-based modeling is an excellent alternative to linear regression analysis. But what makes it so advantageous? Tree-based models:

- Can be used with any type of data, whether numerical (e.g., number of calories in a cereal) or categorical (e.g., manufacturer of a cereal)

- Can handle data that are not normally distributed (normal data are symmetric, bell-shaped, and centered at the mean; however, many real-world data sets do not follow this distribution)

- Are easy to represent visually, making a complex predictive model much easier to interpret

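- Require little data preparation, because variable transformations such as log-scaling or standardization are unnecessary: a tree splits on the ordering of a variable's values, not on their scale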
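The point about transformations follows from how a tree splits a numerical predictor: it only compares values against a threshold, so any transformation that preserves order (such as a logarithm) produces the same grouping of observations. The minimal Python sketch below illustrates this with small hypothetical numbers (not the cereal data). It scores each candidate threshold by the sum of squared errors, which is one common regression-tree criterion and our own choice here, and checks that the best split is identical before and after a log transform.

```python
import math

def sse(values):
    """Sum of squared errors around the group mean (0 for an empty group)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_split_partition(x, y):
    """Return the indices sent left by the threshold split on x with the lowest total SSE."""
    order = sorted(range(len(x)), key=lambda i: x[i])
    best_cost, best_left = math.inf, None
    for k in range(1, len(order)):
        # A threshold can only fall between two distinct values of x.
        if x[order[k - 1]] == x[order[k]]:
            continue
        left, right = order[:k], order[k:]
        cost = sse([y[i] for i in left]) + sse([y[i] for i in right])
        if cost < best_cost:
            best_cost, best_left = cost, frozenset(left)
    return best_left

# Hypothetical toy data: a positive predictor and a response that jumps midway.
x = [1.0, 2.0, 4.0, 8.0, 16.0, 32.0]
y = [10.0, 12.0, 11.0, 30.0, 32.0, 31.0]

raw = best_split_partition(x, y)
logged = best_split_partition([math.log(v) for v in x], y)
print(sorted(raw))    # → [0, 1, 2]
print(raw == logged)  # → True: the log transform leaves the split unchanged
```

The same reasoning covers standardization or any other strictly increasing rescaling, which is why such preprocessing steps are optional for trees.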

How Tree-Based Modeling Works

There are two main types of decision trees: regression trees and classification trees. A regression tree is used when the dependent variable is continuous, such as calories in cereal, and a classification tree is used when it is categorical, such as manufacturer of cereal.
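The two types differ mainly in how they measure how mixed a node is and in what a leaf predicts. The minimal Python sketch below uses two common choices, stated here as assumptions rather than the exact criteria of any particular package: variance for a regression tree (leaf predicts the mean) and Gini impurity for a classification tree (leaf predicts the most frequent class). The node contents are hypothetical, not taken from the UScereal data.

```python
from collections import Counter

def variance(values):
    """Regression-tree impurity: spread of a continuous response within a node."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

def gini(labels):
    """Classification-tree impurity: chance that two random draws from the node disagree."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def leaf_prediction(values, kind):
    """Regression leaves predict the mean; classification leaves predict the majority class."""
    if kind == "regression":
        return sum(values) / len(values)
    return Counter(values).most_common(1)[0][0]

# Hypothetical node contents.
calories = [110.0, 120.0, 130.0]
makers = ["A", "A", "B"]
print(variance(calories), leaf_prediction(calories, "regression"))
print(gini(makers), leaf_prediction(makers, "classification"))
```

Both measures are zero for a perfectly uniform node, which is the "homogeneous" target that splitting aims for.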

Decision trees split the data from the top down, grouping observations into the most homogeneous “sub-nodes” possible based on their characteristics. While the splitting algorithm varies with the type of tree, the goal is always to make the sub-nodes as uniform as possible. This process can continue until every “leaf” holds a single observation. However, leaves that contain so few data points simply memorize the training data, producing a model that generalizes poorly to new data. To keep a tree from growing this far, you can either (1) set a constraint that determines when splitting should stop, such as a maximum depth or a minimum number of observations per node, or (2) “prune” the tree, removing sub-nodes that add little predictive power.
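Top-down splitting with stopping constraints can be sketched as a small recursive regression tree. This is a minimal Python illustration under our own assumptions: splits are scored by the sum of squared errors (a CART-style criterion), the hypothetical parameters `max_depth` and `min_leaf` play the role of the stopping constraints, and pruning is not implemented. The data are made-up numbers, not the cereal data.

```python
def sse(values):
    """Sum of squared errors around the mean of a non-empty group."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_split(rows, y, min_leaf):
    """Search every feature and threshold for the split that most reduces SSE."""
    best = None  # (cost, feature index, threshold)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y[i] for i, r in enumerate(rows) if r[f] < t]
            right = [y[i] for i, r in enumerate(rows) if r[f] >= t]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue  # constraint: every child must keep at least min_leaf rows
            cost = sse(left) + sse(right)
            if best is None or cost < best[0]:
                best = (cost, f, t)
    return best

def grow(rows, y, depth=0, max_depth=2, min_leaf=2):
    """Split top-down until a stopping constraint is hit; each leaf stores the mean."""
    leaf = {"predict": sum(y) / len(y)}
    if depth >= max_depth:
        return leaf  # constraint: maximum depth reached
    split = best_split(rows, y, min_leaf)
    if split is None or split[0] >= sse(y):
        return leaf  # no allowed split makes the node more homogeneous
    _, f, t = split
    li = [i for i, r in enumerate(rows) if r[f] < t]
    ri = [i for i, r in enumerate(rows) if r[f] >= t]
    return {
        "feature": f,
        "threshold": t,
        "left": grow([rows[i] for i in li], [y[i] for i in li], depth + 1, max_depth, min_leaf),
        "right": grow([rows[i] for i in ri], [y[i] for i in ri], depth + 1, max_depth, min_leaf),
    }

def predict(tree, row):
    """Follow the splits from the root down to a leaf."""
    while "feature" in tree:
        tree = tree["left"] if row[tree["feature"]] < tree["threshold"] else tree["right"]
    return tree["predict"]

# Hypothetical data: two predictors per row and a numeric response.
rows = [(1, 0), (2, 1), (3, 0), (6, 1), (7, 0), (8, 1)]
y = [100, 105, 102, 150, 148, 152]
tree = grow(rows, y, max_depth=1)
print(tree["feature"], tree["threshold"])  # → 0 6
print(predict(tree, (7, 1)))               # → 150.0
```

Raising `max_depth` here does not grow the tree further, because `min_leaf=2` already rules out splitting the three-row children.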

Example of a Tree-Based Model

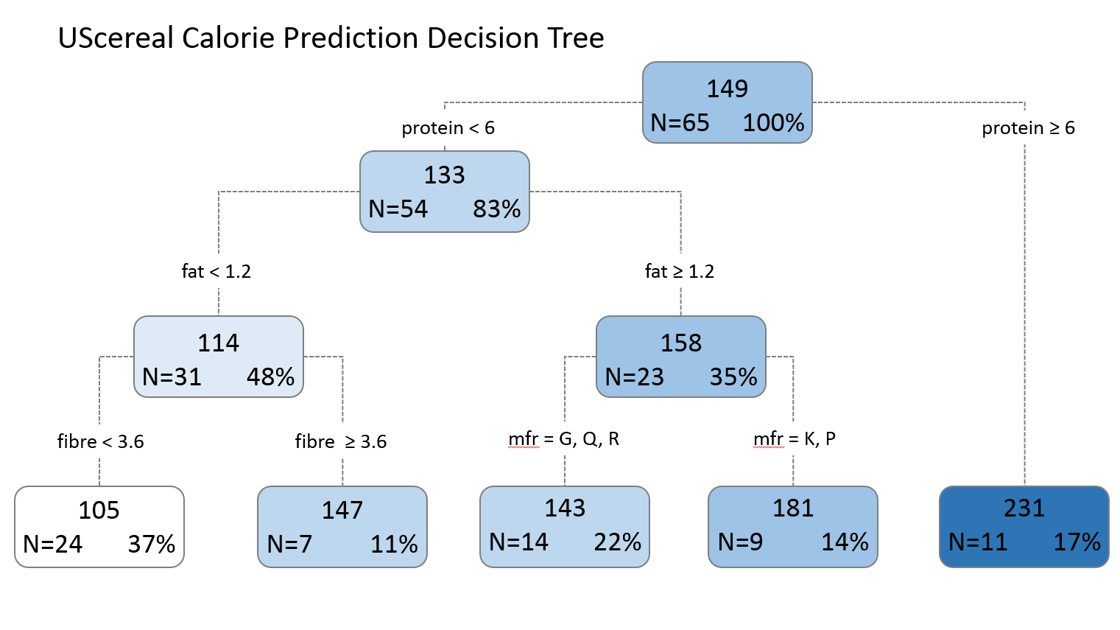

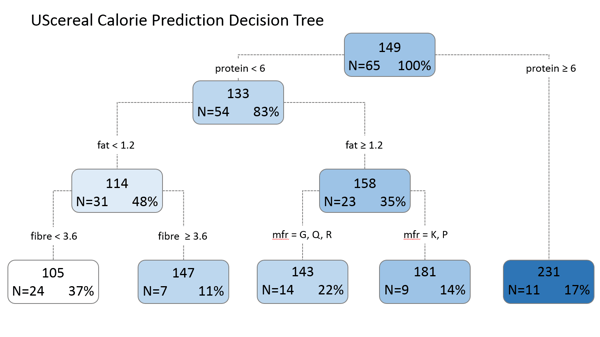

We created a decision tree using the UScereal data set from the MASS package in R, which contains information on types of cereal and their nutritional content. For this model, we used protein, fat, fiber, carbohydrates, and manufacturer to predict the number of calories in a cereal.

A Shapiro-Wilk test for normality indicates that these data are not normally distributed. Because decision trees make no assumption about the distribution of the data, this neither violates the model's assumptions nor affects how the results are interpreted, illustrating a key advantage of tree-based models.

In this visualization, the first split is on protein content at 6 grams: cereals with 6 grams of protein or more fall into the group with the highest calorie counts. For cereals with less than 6 grams of protein, additional factors such as fat, fiber, and carbohydrates also contribute to total calories, with fat having the most influence. These additional subdivisions can be seen in the sub-nodes of the tree.

More complex tree-based models, such as random forests and boosted trees, combine many decision trees. A random forest trains each tree on a bootstrap sample of the data, considering only a random subset of the predictors at each split, and then averages the trees' predictions (or takes a majority vote, for classification) to produce a more accurate estimate than any single tree. Boosted trees are built sequentially: each new, typically shallow tree is fit to the errors left by the trees before it, so the ensemble gradually improves the fit between the independent and dependent variables. Decision trees are thus the basic building block of these more accurate predictive models.
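The difference between the two ensembles can be sketched with one-split trees ("stumps") on a single predictor. This minimal Python illustration is our own simplification: `bagged_stumps` shows the bootstrap-and-average step that random forests build on but omits their random selection of predictors at each split, and `boosted_stumps` shows squared-error boosting, where each stump is fit to the current residuals and scaled by a hypothetical learning rate of 0.1. All function names and the toy data are hypothetical.

```python
import random

def fit_stump(x, y):
    """Fit a one-split regression tree (a "stump") on a single numeric predictor."""
    def sse(v):
        m = sum(v) / len(v)
        return sum((a - m) ** 2 for a in v)

    best = None  # (cost, threshold, left mean, right mean)
    for t in sorted(set(x))[1:]:  # skipping the minimum keeps both sides non-empty
        left = [b for a, b in zip(x, y) if a < t]
        right = [b for a, b in zip(x, y) if a >= t]
        cost = sse(left) + sse(right)
        if best is None or cost < best[0]:
            best = (cost, t, sum(left) / len(left), sum(right) / len(right))
    if best is None:  # only one distinct x value: predict the mean everywhere
        m = sum(y) / len(y)
        return lambda a: m
    _, t, lo, hi = best
    return lambda a: lo if a < t else hi

def bagged_stumps(x, y, n_trees=25, seed=0):
    """Bagging: fit each stump to a bootstrap resample and average their predictions."""
    rng = random.Random(seed)
    stumps = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(x)) for _ in range(len(x))]  # sample with replacement
        stumps.append(fit_stump([x[i] for i in idx], [y[i] for i in idx]))
    return lambda a: sum(s(a) for s in stumps) / len(stumps)

def boosted_stumps(x, y, n_rounds=50, learning_rate=0.1):
    """Boosting: each new stump is fit to the residuals left by the stumps before it."""
    base = sum(y) / len(y)
    stumps = []
    residuals = [b - base for b in y]
    for _ in range(n_rounds):
        s = fit_stump(x, residuals)
        stumps.append(s)
        # Apply a shrunken correction and update what remains to be explained.
        residuals = [r - learning_rate * s(a) for a, r in zip(x, residuals)]
    return lambda a: base + learning_rate * sum(s(a) for s in stumps)

# Hypothetical toy data: the response jumps between x = 3 and x = 4.
x = [1, 2, 3, 4, 5, 6]
y = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
bagged = bagged_stumps(x, y)
boosted = boosted_stumps(x, y)
print(bagged(1), bagged(6))
print(boosted(1), boosted(6))  # close to 1 and 5 after 50 rounds
```

Averaging trees fit to different resamples mainly reduces the variance of a single tree, while boosting mainly reduces bias by letting each tree correct what the previous ones missed; a smaller learning rate needs more rounds but tends to overfit less.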

References

6.5.1. What do we mean by "Normal" data? (n.d.). Retrieved from Engineering Statistics Handbook: https://www.itl.nist.gov/div898/handbook/pmc/section5/pmc51.htm

Analytics Vidhya Content Team. (2016, April 12). A Complete Tutorial on Tree Based Modeling from Scratch (in R & Python). Retrieved from Analytics Vidhya: https://www.analyticsvidhya.com/blog/2016/04/complete-tutorial-tree-based-modeling-scratch-in-python/#two

Deshpande, B. (2011, July 12). 4 key advantages of using decision trees for predictive analytics. Retrieved from Simafore: http://www.simafore.com/blog/bid/62333/4-key-advantages-of-using-decision-trees-for-predictive-analytics

Introduction to Boosting Trees for Regression and Classification. (n.d.). Retrieved from TIBCO Software: http://www.statsoft.com/Textbook/Boosting-Trees-Regression-Classification

Koehrsen, W. (2017, December 27). Random Forest Simple Explanation. Retrieved from Medium: https://medium.com/@williamkoehrsen/random-forest-simple-explanation-377895a60d2d